How and Where Concurrent Asynchronous I/O with ASP.NET Web API

When we have multiple uncorrelated I/O operations that need to be kicked off, there are quite a few ways to fire them off, and the way you choose makes a great deal of difference in a .NET server-side application. Pablo Cibraro already has a great post on this topic (await, WhenAll, WaitAll, oh my!!), which I recommend you check out. In this article, I would like to touch on a few more points. Let's look at the options one by one. I will use a scenario with multiple HTTP requests made from an ASP.NET Web API application, but the same applies to any sort of I/O operation (long-running database calls, file system operations, etc.).

We will hit two different endpoints to consume the data:

- http://localhost:2700/api/cars/cheap

- http://localhost:2700/api/cars/expensive

As we can infer from the URIs, one of them will get us the cheap cars and the other will get us the expensive ones. I created a separate ASP.NET Web API application to simulate these endpoints. Each one takes more than 500ms to complete, and in our target ASP.NET Web API application we will aggregate these two resources and return the result. It sounds like a very common scenario.

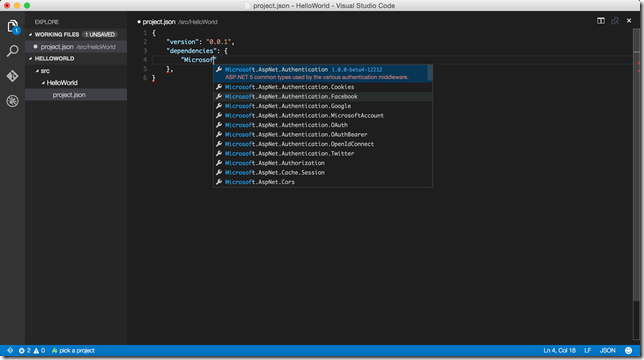

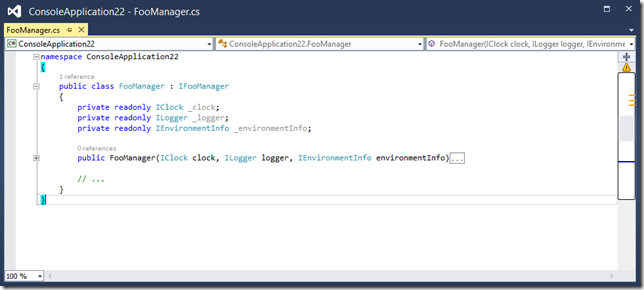

Inside our target API controller, we have the following initial structure:

    using System.Collections.Generic;
    using System.Net;
    using System.Net.Http;
    using System.Threading.Tasks;
    using Newtonsoft.Json;

    public class Car
    {
        public int Id { get; set; }
        public string Make { get; set; }
        public string Model { get; set; }
        public int Year { get; set; }
        public float Price { get; set; }
    }

    public class CarsController : BaseController
    {
        private static readonly string[] PayloadSources = new[]
        {
            "http://localhost:2700/api/cars/cheap",
            "http://localhost:2700/api/cars/expensive"
        };

        // Asynchronous version: the thread is released while the request is in flight.
        private async Task<IEnumerable<Car>> GetCarsAsync(string uri)
        {
            using (HttpClient client = new HttpClient())
            {
                var response = await client.GetAsync(uri).ConfigureAwait(false);
                var content = await response.Content
                    .ReadAsAsync<IEnumerable<Car>>().ConfigureAwait(false);

                return content;
            }
        }

        // Synchronous version: the calling thread is blocked until the download completes.
        private IEnumerable<Car> GetCars(string uri)
        {
            using (WebClient client = new WebClient())
            {
                string carsJson = client.DownloadString(uri);
                IEnumerable<Car> cars = JsonConvert
                    .DeserializeObject<IEnumerable<Car>>(carsJson);

                return cars;
            }
        }
    }

We have a Car class which represents the car object that we are going to deserialize from the JSON payload. Inside the controller, we have our list of endpoints and two private methods which are responsible for making HTTP GET requests against the specified URI. The GetCarsAsync method uses the System.Net.Http.HttpClient class, which was introduced with .NET 4.5, to make the HTTP calls asynchronously. With the new C# 5.0 asynchronous language features (a.k.a. the async modifier and the await operator), it is pretty straightforward to write the asynchronous code, as you can see. Note that we used the ConfigureAwait method here and passed false for the continueOnCapturedContext parameter. Why we need to do this is quite a long topic, but briefly: one of our samples, which we are about to go deep into, would introduce a deadlock if we didn't use this method.
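To make the "captured context" idea a little more concrete, here is a minimal console sketch. CountingContext, WithCaptureAsync, WithoutCaptureAsync and CountPosts are hypothetical names made up for this illustration, and Task.Delay stands in for the HTTP call. It only shows whether the continuation after an await is posted back to the captured SynchronizationContext; it does not reproduce the ASP.NET request context itself.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical context that counts how many continuations are posted to it.
public sealed class CountingContext : SynchronizationContext
{
    private int _postCount;

    public int PostCount { get { return Volatile.Read(ref _postCount); } }

    public override void Post(SendOrPostCallback d, object state)
    {
        Interlocked.Increment(ref _postCount);
        // Hand the work to the thread pool so that nothing can deadlock here.
        ThreadPool.QueueUserWorkItem(_ => d(state));
    }
}

public static class ConfigureAwaitSketch
{
    // Plain await: the continuation is posted to the captured context.
    public static async Task WithCaptureAsync()
    {
        await Task.Delay(50);
    }

    // ConfigureAwait(false): the continuation does not go through the captured context.
    public static async Task WithoutCaptureAsync()
    {
        await Task.Delay(50).ConfigureAwait(false);
    }

    // Runs the given async method under a fresh CountingContext and
    // returns how many continuations were posted to that context.
    public static int CountPosts(Func<Task> asyncMethod)
    {
        SynchronizationContext previous = SynchronizationContext.Current;
        var context = new CountingContext();
        SynchronizationContext.SetSynchronizationContext(context);
        try
        {
            // Blocking is safe here only because Post never needs this thread.
            asyncMethod().GetAwaiter().GetResult();
            return context.PostCount;
        }
        finally
        {
            SynchronizationContext.SetSynchronizationContext(previous);
        }
    }

    public static void Main()
    {
        Console.WriteLine("await:                 " + CountPosts(WithCaptureAsync));
        Console.WriteLine("ConfigureAwait(false): " + CountPosts(WithoutCaptureAsync));
    }
}
```

The first call should report one posted continuation and the second none. A context that lets only one thread in at a time, combined with a caller that blocks that thread while waiting for the task, is what turns the first variant into a deadlock.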

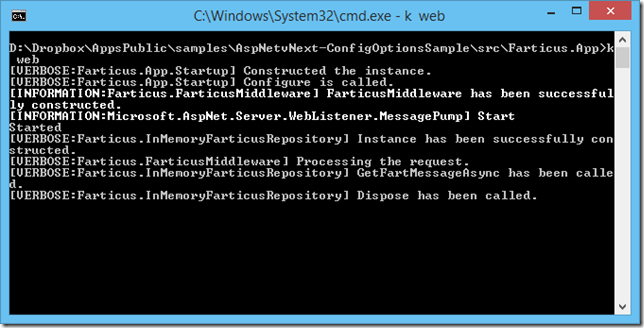

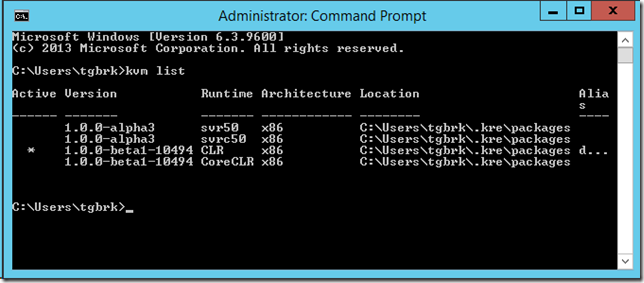

To be able to measure the performance, we will use ab.exe, the Apache HTTP server benchmarking tool (a.k.a. ApacheBench). It comes with the Apache web server installation, but you don't actually need to install it: when you download the ZIP file for the installation and extract it, you will find ab.exe inside. Alternatively, you may use the Web Capacity Analysis Tool (WCAT) from the IIS team. It's a lightweight HTTP load generation tool primarily designed to measure the performance of a web server within a controlled environment. However, WCAT is a bit hard to grasp and set up. That's why we used ab.exe here for simple load tests.

Please note that the comparisons below are crude and don't represent any real benchmarking. They are just comparisons for demo purposes, and they illustrate the points that we are looking for.

Synchronous and not In Parallel

First, we will look at the fully synchronous, non-parallel version of the code. This operation will block the running thread for the amount of time it takes to complete two network I/O operations. The code is very simple thanks to LINQ.

    [HttpGet]
    public IEnumerable<Car> AllCarsSync()
    {
        IEnumerable<Car> cars = PayloadSources.SelectMany(x => GetCars(x));
        return cars;
    }

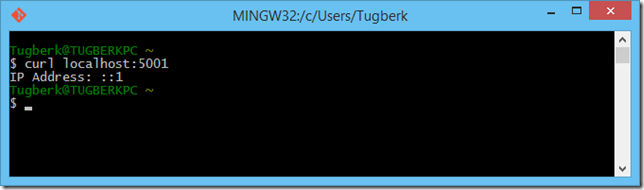

For a single request, we expect this to complete in about a second.

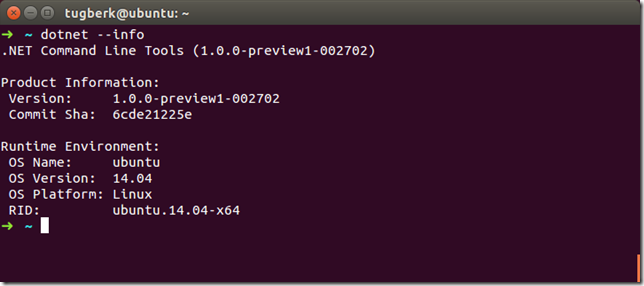

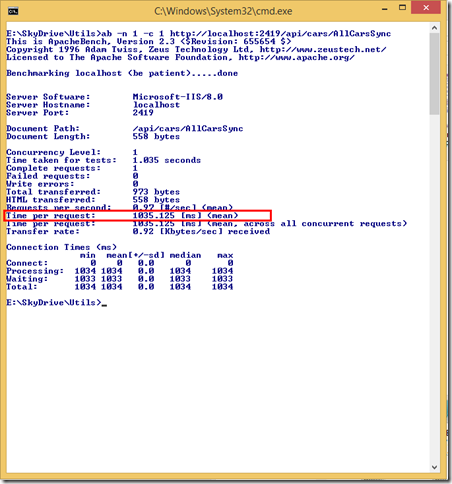

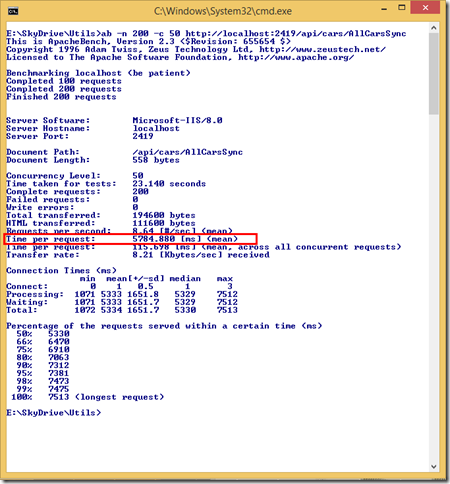

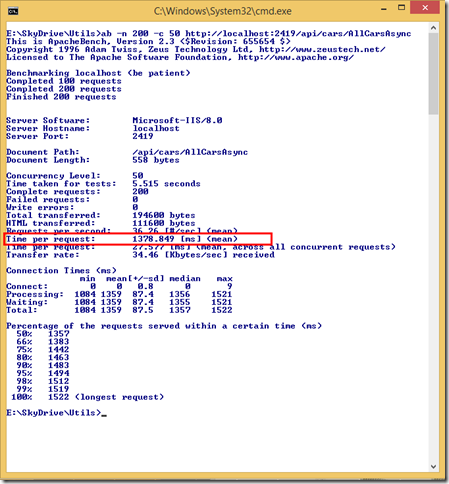

The result is not surprising. However, when you have multiple concurrent requests against this endpoint, you will see that the blocked threads become the bottleneck for your application. The following screenshot shows 200 requests to this endpoint in blocks of 50 concurrent requests.

The result is now worse, and we are paying the price for blocking the threads on long-running I/O operations. You may think that running these in parallel will reduce the single request time, and you are not wrong, but this has its own caveats, which is the topic of our next section.

Synchronous and In Parallel

This option is almost never good for your application. With this option, you perform the I/O operations in parallel, and the request time will be significantly reduced if you measure with only one request. However, in our sample case here, you will be consuming two threads instead of one to process the request, and you will block both of them while waiting for the HTTP requests to complete. Although this reduces the processing time for a single request, it consumes more resources, and you will see the overall request time increase as your request count increases. Let's look at the code of the ASP.NET Web API controller action method.

    [HttpGet]
    public IEnumerable<Car> AllCarsInParallelSync()
    {
        IEnumerable<Car> cars = PayloadSources.AsParallel()
            .SelectMany(uri => GetCars(uri)).AsEnumerable();

        return cars;
    }

We used the "Parallel LINQ (PLINQ)" feature of the .NET Framework here to process the HTTP requests in parallel. As you can see, it was almost too easy; in fact, it was only one line of digestible code. I tend to see a relationship between the above code and tasty donuts. They all look tasty, but they will work as hard as possible to clog our carotid arteries. The same applies to the above code: it looks really sweet but can make our server application miserable. How so? Let's send a request to this endpoint to start seeing how.
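To see where the extra threads go, here is a minimal console sketch. FakeBlockingFetch is a hypothetical stand-in for GetCars that blocks with Thread.Sleep instead of making an HTTP call, and the degree of parallelism is pinned to two only to keep the demo predictable; neither is part of the original sample. It records which threads executed the blocking calls.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Diagnostics;
using System.Linq;
using System.Threading;

public static class BlockingParallelSketch
{
    private static readonly string[] Sources = { "cheap", "expensive" };

    // IDs of the threads that executed a blocking "fetch" (the value is unused).
    public static readonly ConcurrentDictionary<int, bool> ThreadsUsed =
        new ConcurrentDictionary<int, bool>();

    // Hypothetical stand-in for GetCars: blocks the calling thread for 200 ms.
    public static IEnumerable<string> FakeBlockingFetch(string source)
    {
        ThreadsUsed[Thread.CurrentThread.ManagedThreadId] = true;
        Thread.Sleep(200);
        return new[] { source + "-1", source + "-2" };
    }

    // One thread, blocked for the sum of both waits.
    public static List<string> FetchSequential()
    {
        return Sources.SelectMany(s => FakeBlockingFetch(s)).ToList();
    }

    // Up to two threads, each blocked for the duration of its own wait.
    public static List<string> FetchWithPlinq()
    {
        return Sources.AsParallel()
            .WithDegreeOfParallelism(2)
            .SelectMany(s => FakeBlockingFetch(s))
            .ToList();
    }

    public static void Main()
    {
        var watch = Stopwatch.StartNew();
        FetchSequential();
        Console.WriteLine("sequential: {0} ms on {1} thread(s)",
            watch.ElapsedMilliseconds, ThreadsUsed.Count);

        ThreadsUsed.Clear();
        watch.Restart();
        FetchWithPlinq();
        Console.WriteLine("PLINQ:      {0} ms on {1} thread(s)",
            watch.ElapsedMilliseconds, ThreadsUsed.Count);
    }
}
```

On a typical multi-core machine I would expect the PLINQ run to finish in roughly half the time while reporting two threads, which is exactly the trade: a shorter single request paid for with more blocked threads.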

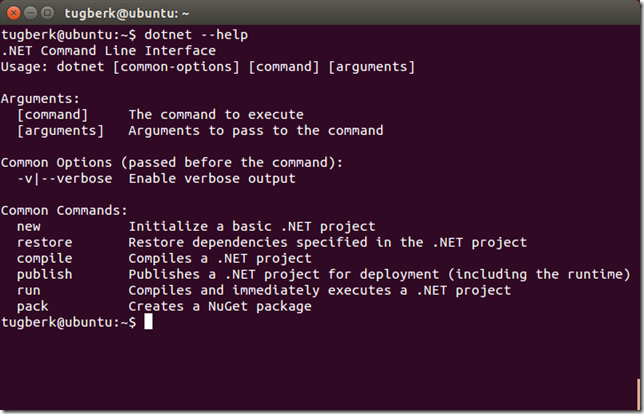

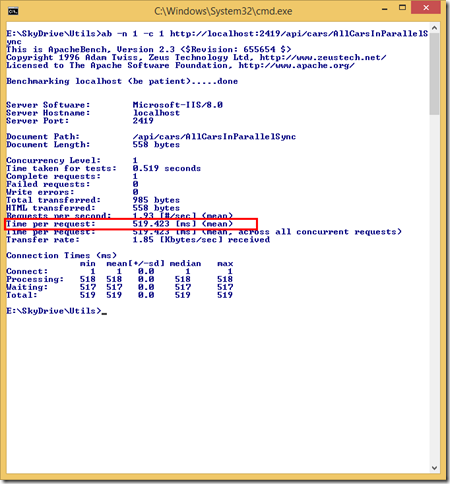

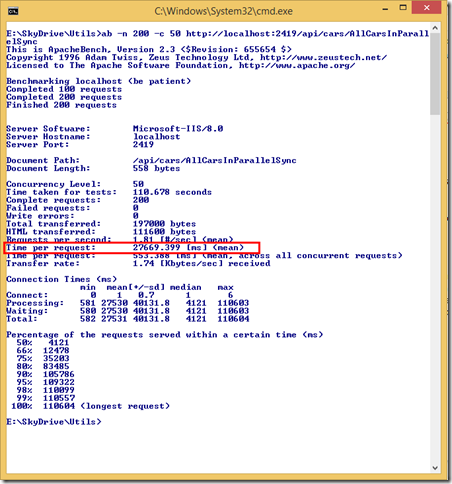

As you can see, the overall request time has been cut in half. This must be good, right? Not completely. As mentioned before, this is going to hurt us if we see too many requests coming to this endpoint. Let's simulate this with ab.exe and send 200 requests to this endpoint in blocks of 50 concurrent requests.

The overall performance is now significantly reduced. So, where would this type of implementation make sense? If your server application has a small number of users (for example, an HTTP API which is consumed by internal applications within your small team), this type of implementation may be beneficial. However, as it's now annoyingly simple to write asynchronous code with built-in language features, I'd suggest that you choose our last option here: "Asynchronous and In Parallel (In a Non-Blocking Fashion)".

Asynchronous and not In Parallel

Here, we won't introduce any concurrent operations; we will go through the requests one by one, but in an asynchronous manner, so that the processing thread is freed up during the dead waiting period.

    [HttpGet]
    public async Task<IEnumerable<Car>> AllCarsAsync()
    {
        List<Car> carsResult = new List<Car>();
        foreach (var uri in PayloadSources)
        {
            IEnumerable<Car> cars = await GetCarsAsync(uri);
            carsResult.AddRange(cars);
        }

        return carsResult;
    }

What we do here is quite simple: we iterate through the URI array and make the asynchronous HTTP call for each one. Notice that we were able to use the await keyword inside the foreach loop. This is all fine. The compiler will do the right thing and handle this for us. One thing to keep in mind is that the asynchronous operations won't run in parallel here. So, we won't see a difference when we send a single request to this endpoint, as we are going through the requests one by one.
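A small console sketch can confirm that awaiting inside the loop runs the operations one after another. FakeFetchAsync is a hypothetical stand-in for GetCarsAsync that uses Task.Delay instead of an HTTP call, and it logs when each call starts and ends.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

public static class SequentialAwaitSketch
{
    // Hypothetical stand-in for GetCarsAsync; records start/end events in 'log'.
    private static async Task<string[]> FakeFetchAsync(string source, List<string> log)
    {
        lock (log) { log.Add("start " + source); }
        await Task.Delay(100).ConfigureAwait(false);
        lock (log) { log.Add("end " + source); }
        return new[] { source + "-car" };
    }

    public static async Task<List<string>> RunAsync()
    {
        var log = new List<string>();
        foreach (var source in new[] { "cheap", "expensive" })
        {
            // The next iteration does not begin until this await completes.
            await FakeFetchAsync(source, log).ConfigureAwait(false);
        }

        return log;
    }

    public static void Main()
    {
        // Prints: start cheap, end cheap, start expensive, end expensive
        foreach (var line in RunAsync().GetAwaiter().GetResult())
        {
            Console.WriteLine(line);
        }
    }
}
```

The second call never starts before the first one has ended, so the total time is the sum of the individual waits, while no thread is held during either of them.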

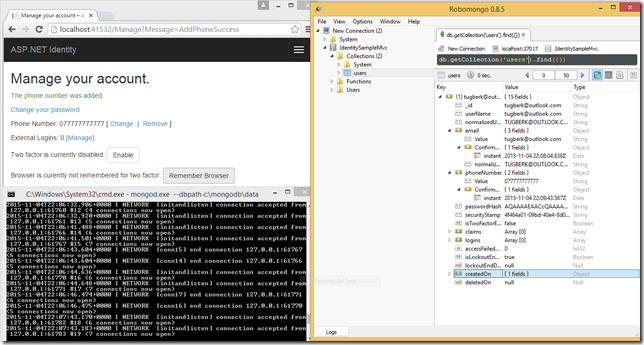

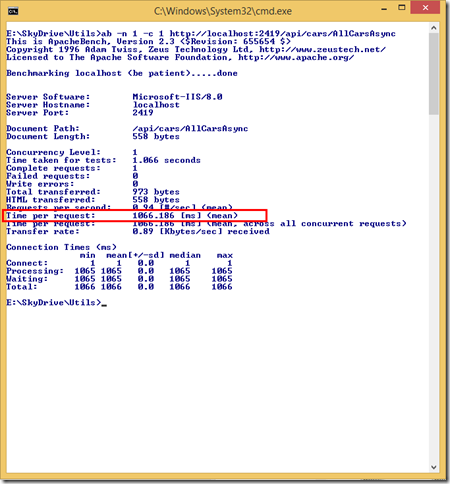

As expected, it took around a second. When we increase the number of requests and the concurrency level, we will see that the average request time still stays at around a second.

This option is certainly better than the previous ones. However, we can still do better in certain cases where we have a limited number of concurrent I/O operations. The last option looks into that, but before moving on to it, we will look at one other option which should be avoided where possible.

Asynchronous and In Parallel (In a Blocking Fashion)

Among the options shown here, this is the worst one you can choose. When we have multiple Task-returning asynchronous methods in hand, we can wait for all of them to finish with the static WaitAll method of the Task class. This has several drawbacks: you will be consuming the asynchronous operations in a blocking fashion, and if these asynchronous methods are not implemented correctly, you will end up with deadlocks. At the beginning of this article, we pointed out the usage of the ConfigureAwait method. That was to prevent deadlocks here. You can learn more about this from the following blog post: Asynchronous .NET Client Libraries for Your HTTP API and Awareness of async/await's Bad Effects.

Let’s look at the code:

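    [HttpGet]
    public IEnumerable<Car> AllCarsInParallelBlockingAsync()
    {
        // Materialize the query once so that each request is started exactly one time;
        // re-enumerating the lazy Select query would call GetCarsAsync again.
        Task<IEnumerable<Car>>[] allTasks =
            PayloadSources.Select(uri => GetCarsAsync(uri)).ToArray();

        // Blocks the request thread until every task has completed.
        Task.WaitAll(allTasks);

        return allTasks.SelectMany(task => task.Result);
    }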
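One detail worth keeping in mind with code of this shape: a LINQ Select query is lazy, so every enumeration of it invokes the selector again, which for a task-producing selector means starting the operations again. The sketch below counts the invocations when such a query is enumerated twice versus materialized once. FakeFetchAsync and the call counter are hypothetical stand-ins for GetCarsAsync, not part of the original sample.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

public static class LazySelectSketch
{
    private static int _calls;

    // Hypothetical stand-in for GetCarsAsync that counts its invocations.
    private static Task<int> FakeFetchAsync(int id)
    {
        Interlocked.Increment(ref _calls);
        return Task.FromResult(id);
    }

    // Enumerates the lazy query twice: once for WaitAll, once for the results.
    public static int CallsWhenEnumeratedTwice()
    {
        _calls = 0;
        IEnumerable<Task<int>> tasks = new[] { 1, 2 }.Select(id => FakeFetchAsync(id));
        Task.WaitAll(tasks.ToArray());           // first enumeration: 2 calls
        tasks.Select(t => t.Result).ToList();    // second enumeration: 2 more calls
        return _calls;
    }

    // Materializes the tasks once and reuses the same array afterwards.
    public static int CallsWhenMaterializedOnce()
    {
        _calls = 0;
        Task<int>[] tasks = new[] { 1, 2 }.Select(id => FakeFetchAsync(id)).ToArray();
        Task.WaitAll(tasks);
        tasks.Select(t => t.Result).ToList();    // reads the already completed tasks
        return _calls;
    }

    public static void Main()
    {
        Console.WriteLine("enumerated twice:  " + CallsWhenEnumeratedTwice());  // 4
        Console.WriteLine("materialized once: " + CallsWhenMaterializedOnce()); // 2
    }
}
```

Holding the tasks in an array (or a list) before waiting on them keeps the number of started operations equal to the number of URIs.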

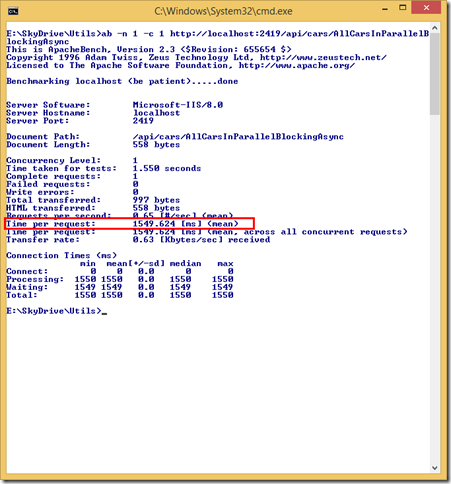

Let's send a request to this endpoint to see how it performs:

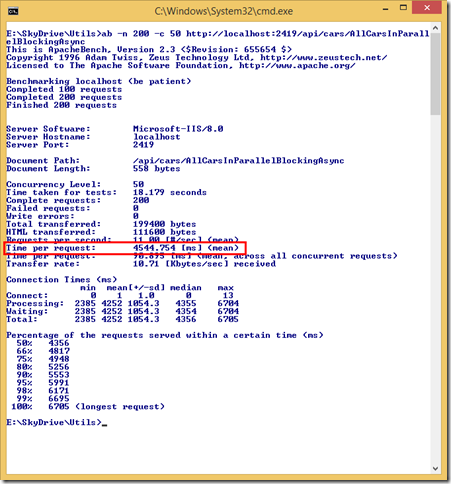

It performed really badly, and it gets worse as soon as you increase the concurrency rate:

Never, ever think about implementing this solution. No further discussion is needed here in my opinion.

Asynchronous and In Parallel (In a Non-Blocking Fashion)

Finally, the best solution: Asynchronous and In Parallel (In a Non-Blocking Fashion). The code snippet below says it all, but to go through it quickly: we bundle the tasks together and await the task returned by the Task.WhenAll utility method. This performs the operations asynchronously and in parallel.

    [HttpGet]
    public async Task<IEnumerable<Car>> AllCarsInParallelNonBlockingAsync()
    {
        IEnumerable<Task<IEnumerable<Car>>> allTasks =
            PayloadSources.Select(uri => GetCarsAsync(uri));

        IEnumerable<Car>[] allResults = await Task.WhenAll(allTasks);

        return allResults.SelectMany(cars => cars);
    }
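As a rough illustration of what Task.WhenAll gives us, the console sketch below starts two simulated operations before awaiting either of them and then collects the results. FakeFetchAsync, its delays and the log are hypothetical and only stand in for GetCarsAsync. Note that the results array follows the order of the input tasks, not the order in which they complete.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

public static class WhenAllSketch
{
    // Hypothetical stand-in for GetCarsAsync with a configurable delay.
    private static async Task<string> FakeFetchAsync(string source, int delayMs, List<string> log)
    {
        lock (log) { log.Add("start " + source); }
        await Task.Delay(delayMs).ConfigureAwait(false);
        lock (log) { log.Add("end " + source); }
        return source;
    }

    public static async Task<Tuple<string[], List<string>>> RunAsync()
    {
        var log = new List<string>();

        // Both operations are started here, before either one is awaited.
        Task<string>[] tasks =
        {
            FakeFetchAsync("cheap", 300, log),
            FakeFetchAsync("expensive", 50, log)
        };

        // No thread is blocked while the operations are in flight.
        string[] results = await Task.WhenAll(tasks).ConfigureAwait(false);
        return Tuple.Create(results, log);
    }

    public static void Main()
    {
        Tuple<string[], List<string>> outcome = RunAsync().GetAwaiter().GetResult();
        Console.WriteLine("results: " + string.Join(", ", outcome.Item1));
        Console.WriteLine("events:  " + string.Join(", ", outcome.Item2));
    }
}
```

Both "start" events are logged before any "end" event, and the results still come back as cheap first, expensive second, even though the second operation is the faster one in this sketch.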

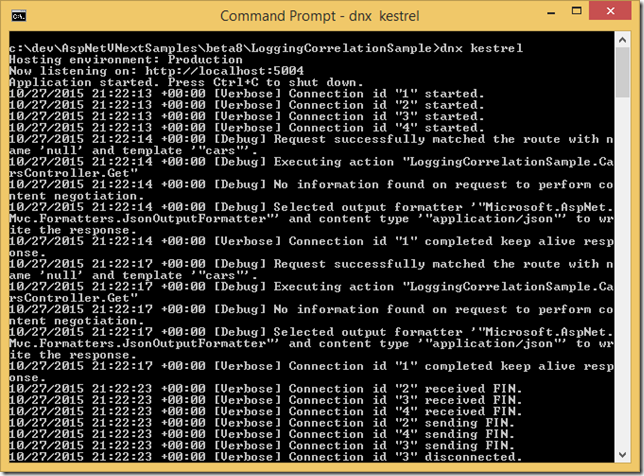

If we make a request to the endpoint to execute this piece of code, the result will be similar to the previous one:

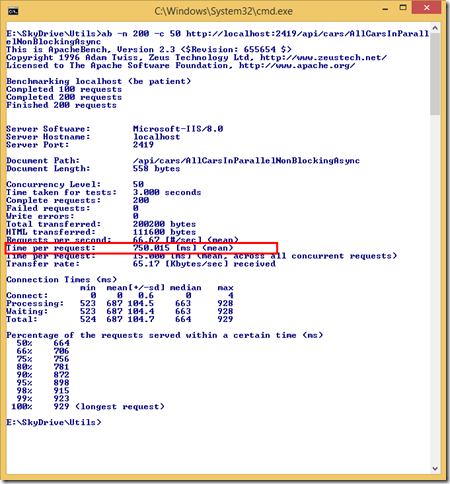

However, when we make 50 concurrent requests 4 times, the result shines and shows the advantages of asynchronous I/O handling:

Conclusion

At the most basic level, what we can take away from this article is this: do perform load tests against your server applications based on your estimated consumption rates if you have any sort of multiple I/O operations. Two of the above options are what you would want in the case of multiple I/O operations. One of them is "Asynchronous but not In Parallel", which is the safest option in my personal opinion, and the other is "Asynchronous and In Parallel (In a Non-Blocking Fashion)". The latter significantly reduces the request time depending on the hardware and the number of I/O operations you have, but as our small benchmarking results showed, it may not be a good fit to process many concurrent asynchronous I/O operations in one request just to reduce a single request time. The result we would see will most probably be different under high load.